Building My AI Plant Assistant: An E2E Journey

Imagine being able to diagnose plant diseases instantly, right from your smartphone. That was my initial spark – to create a mobile app powered by AI that could identify plant ailments with a simple photo. The dream was clear, involving training effective models and developing a user-friendly mobile application. However, the reality of manually labeling countless images, painstakingly tweaking model parameters, and wrestling with app deployment proved far more time-consuming and complex than I initially anticipated.

My initial workflow felt chaotic: manually labeling images, training models using Google Colab, converting them to mobile-friendly formats, and then struggling to integrate them seamlessly into my React Native mobile app. This iterative process felt like a never-ending cycle, a true Sisyphean task.

That’s why I embarked on a mission to build a fully automated, end-to-end (E2E) system. The goal was to automate everything from data labeling and versioning to model training, optimization, and deployment. This would free me to focus on the core AI research and development, rather than getting bogged down in repetitive, manual tasks.

The Vision: Why Automate Plant Disease Detection?

My core reasons for investing time into this automation:

Faster Iteration

Improved Accuracy

Scalability

Whether you’re a seasoned researcher, a passionate hobbyist, or a budding developer, this E2E approach can empower you to build and deploy AI-powered solutions more efficiently and effectively. The principles I’ve applied here are broadly applicable to other AI-driven projects as well.

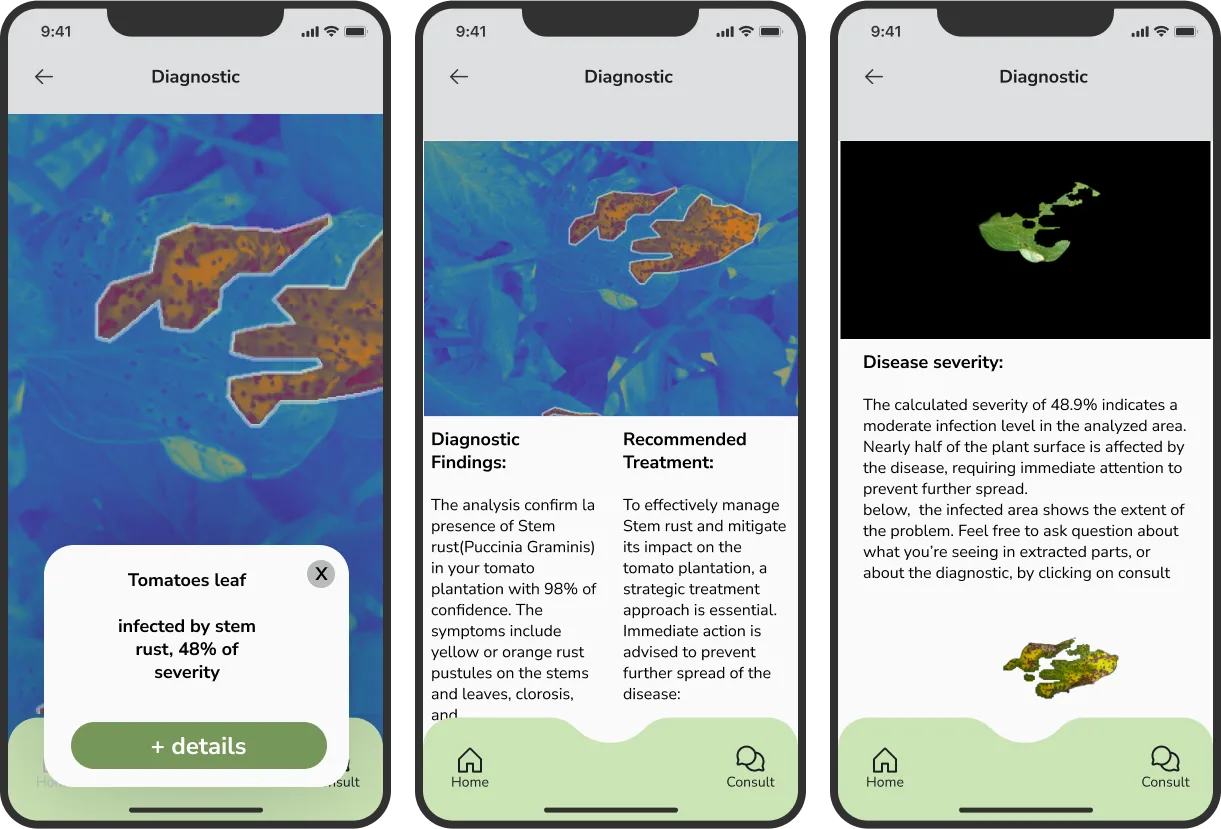

Here’s a sneak peek at how the app currently looks:

Screenshot of the AI-powered mobile app

The E2E Strategy

Here's a breakdown of the key building blocks that make up my automated AI plant assistant. Let’s dive into the details of each component:

With this automation pipeline, I simply label images in the annotation tool, and as soon as I save my work, a new version of my dataset is automatically uploaded to my Google Drive and a reference to those artifacts is stored in W&B. I’m employing a data persistence strategy in which only the modifications (newly labeled images, deletions, or replacements) are uploaded, which saves significant time and bandwidth.
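To make the "only upload the modifications" idea concrete, here is a minimal sketch of one way to detect what changed between two dataset versions. It is an illustration under assumptions, not the code used in the project: the function names (`hash_file`, `snapshot`, `diff_snapshots`) are hypothetical, and comparing SHA-256 content hashes is just one possible way to spot replaced files.

```python
import hashlib
from pathlib import Path


def hash_file(path: Path) -> str:
    """Return the SHA-256 hex digest of a file's contents."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        # Read in chunks so large images are never loaded fully into memory.
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def snapshot(dataset_dir: Path) -> dict[str, str]:
    """Map every file (by path relative to dataset_dir) to its content hash."""
    return {
        p.relative_to(dataset_dir).as_posix(): hash_file(p)
        for p in sorted(dataset_dir.rglob("*"))
        if p.is_file()
    }


def diff_snapshots(previous: dict[str, str], current: dict[str, str]) -> dict[str, list[str]]:
    """Classify changes between two snapshots as added, deleted, or replaced."""
    added = sorted(set(current) - set(previous))
    deleted = sorted(set(previous) - set(current))
    replaced = sorted(
        name for name in set(current) & set(previous)
        if current[name] != previous[name]
    )
    return {"added": added, "deleted": deleted, "replaced": replaced}
```

Only the files listed under `added` and `replaced` would need to be uploaded, and the ones under `deleted` removed remotely; the snapshot from the previous save can be kept on disk and compared on the next one.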

For monitoring my training progress, I’ve adapted the strategy used by Blue River Technology (now part of John Deere). You can find a detailed explanation of how I replicated their approach in this article. In short, I leverage Weights & Biases (W&B) for comprehensive experiment tracking and visualization.

The best-performing models, determined by an F1 score evaluation, are then prepared for production. This involves converting them to efficient, lite models optimized for mobile deployment and storing them as artifacts within W&B. Finally, the mobile app retrieves the selected model artifact to perform real-time inference on device.
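The selection step can be sketched in a few lines. This is only an illustration: the `EvaluatedModel` record, its field names, and the rule of breaking F1 ties in favour of the smaller file are assumptions made for the example, not details taken from the pipeline itself.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EvaluatedModel:
    """Hypothetical record of one trained model and its evaluation result."""
    name: str
    f1: float
    size_bytes: int


def select_best(models: list[EvaluatedModel]) -> EvaluatedModel:
    """Pick the model with the highest F1 score.

    Ties are broken in favour of the smaller file, which is an assumption
    that suits mobile deployment rather than a rule from the original setup.
    """
    if not models:
        raise ValueError("no evaluated models to choose from")
    return max(models, key=lambda m: (m.f1, -m.size_bytes))
```

The chosen model is then the one that would go through conversion and be stored as an artifact for the app to download.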

Alright, enough talk! SHOW ME THE CODE (and the specifics)!

Yes, let’s get into the code and the implementation details :D

Data Labeling & Versioning:

For image annotation, I’m using the open-source sreeni image-annotator, a Qt-based GUI application released under the MIT licence (kudos to the developer for their great work!). I’ve made some modifications so that every new version of the labeled dataset is automatically uploaded to my Google Drive, which is integrated with W&B. Google Drive serves as the central repository for the images, while the annotation records, such as segmented regions or bounding-box locations on each image, are saved in a database and a YAML file for each version. I then use W&B Artifacts to version-control these datasets. Whenever a new set of annotations is uploaded to Google Drive, a script is triggered to create a new W&B Artifact that links to the data in Google Drive. This provides a robust, auditable history of my training data.
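Here is a rough sketch of what such a script might look like using W&B reference artifacts. Several things are assumptions for illustration: the Google Drive folder is assumed to be mounted as a local directory (as it can be in Colab), so it is referenced through a `file://` URI, and the function names, the `job_type` label, and the metadata layout are hypothetical rather than taken from the actual project.

```python
from collections import Counter
from pathlib import Path


def build_artifact_metadata(dataset_dir: Path) -> dict:
    """Summarise a dataset version: total file count and count per extension."""
    files = [p for p in dataset_dir.rglob("*") if p.is_file()]
    by_extension = Counter(p.suffix.lower() for p in files)
    return {"num_files": len(files), "by_extension": dict(sorted(by_extension.items()))}


def log_dataset_version(dataset_dir: Path, project: str, artifact_name: str) -> None:
    """Create a new W&B artifact version that points at the dataset folder."""
    # Imported here so the metadata helper stays usable without wandb installed.
    import wandb

    run = wandb.init(project=project, job_type="dataset-upload")
    artifact = wandb.Artifact(
        name=artifact_name,
        type="dataset",
        metadata=build_artifact_metadata(dataset_dir),
    )
    # A reference records checksums and locations instead of copying the files.
    artifact.add_reference(dataset_dir.resolve().as_uri())
    run.log_artifact(artifact)
    run.finish()
```

Because the artifact only stores references, W&B keeps track of which files belong to each version while the images themselves stay in the Drive folder.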

Model Training & Monitoring:

Fine-tuning models and experimenting with new techniques to achieve the best possible results is the core of the project.

My model training process is primarily executed within Google Colab for its accessibility and free GPU resources. I use a variety of techniques including transfer learning, data augmentation, and custom loss functions, all with the goal of maximizing the accuracy and robustness of my plant disease detection models.

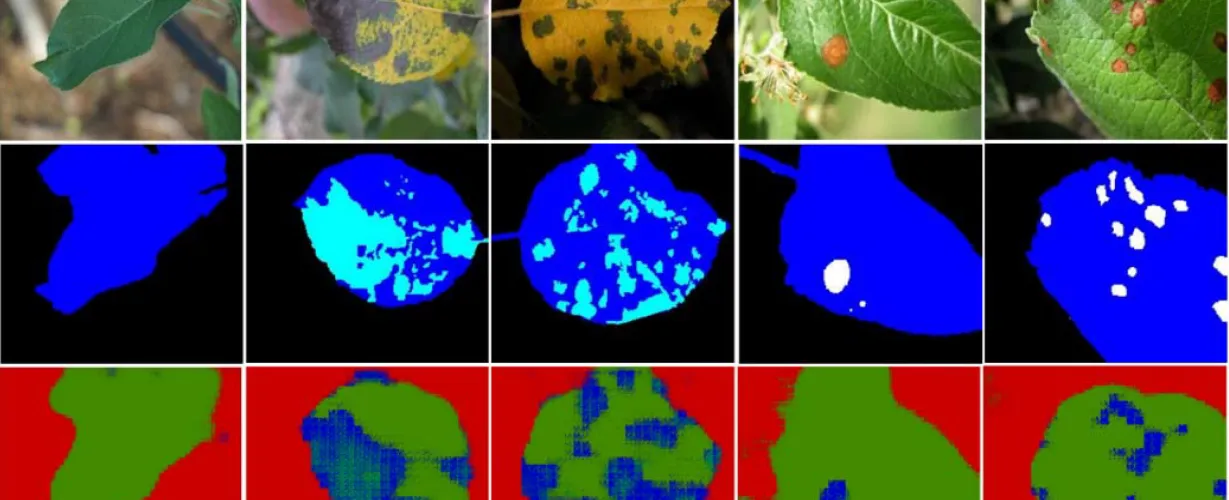

W&B is indispensable for this phase. I track every experiment meticulously, logging key metrics like accuracy, precision, recall, F1-score, and loss curves. I also log visualizations of the model’s predictions on a validation set, which helps me identify areas where the model is struggling.
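For reference, the metrics mentioned above can be computed from raw predictions as follows. This is a minimal, dependency-free sketch for a single class treated as the positive label; the function name `binary_metrics` and the example label names are hypothetical, and a real multi-class setup would typically compute these per class and average them.

```python
def binary_metrics(y_true: list[str], y_pred: list[str], positive: str) -> dict[str, float]:
    """Compute accuracy, precision, recall, and F1 for one positive class."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and the same length")

    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    correct = sum(1 for t, p in pairs if t == p)

    # Guard against division by zero when a class is never predicted or never present.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0

    return {
        "accuracy": correct / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }
```

The returned dictionary has the shape that `wandb.log` accepts, so it could be logged once per validation pass to build the metric curves in the W&B dashboard.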

Challenges and Lessons Learned:

As a full-stack developer, I usually build software features, web apps, and server-side, API-based applications. Setting all this up demanded a lot of DevOps skills, and it took me time to understand the different concepts and assemble the knowledge I needed to build this solution. It made me see dataset processing differently, in particular how to avoid or reduce unnecessary data processing. I spent a long time exploring YOLO repositories for insights, and I also picked up some React Native practices and APIs. Optimizing model size for mobile deployment was a major hurdle: I had to experiment with different quantization techniques to reduce the model size without sacrificing too much accuracy. The whole effort was hard and complex, but it has been a huge time-saver in the long run.
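To give a feel for the size/accuracy trade-off behind quantization, here is a toy sketch of symmetric 8-bit quantization applied to a list of weights. It is a generic illustration, not the technique or toolchain used in this project; the function names are hypothetical, and real converters apply this kind of mapping per tensor or per channel with many extra details.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Map float weights to integers in [-127, 127] with a single shared scale."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # Fall back to a scale of 1.0 when every weight is zero (or the list is empty).
    scale = max_abs / 127 if max_abs else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize_int8(quantized: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from their 8-bit representation."""
    return [q * scale for q in quantized]
```

Each weight now fits in one byte instead of the four bytes of a 32-bit float, at the cost of a rounding error of at most half the scale per weight. That error is what shows up as the accuracy drop that has to be balanced against the smaller model.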