Rebuilding products - Blue River ML stack

machine learning devops data analysis

I recently came across some fascinating insights into how Blue River, a company specializing in precision agriculture, approaches machine learning (ML), and I was seriously impressed. They’ve built a system for training, deploying, and monitoring ML models, designed to maximize performance, reproducibility, and efficiency in the field. Let’s dive in!

But how do they train and deploy AI models?

Their mission is to train and deploy AI models that detect crops and weeds, locating them in real-time camera frames. An agricultural machine that should spray only the weeds has to do this in real time and as precisely as possible, so that it hits the weeds and nothing else!

Since these models need to be both accurate and lightweight, they’ve divided their workflow into two key parts:

- Research Workflow – Focused on developing and experimenting with new ML models. They run experiments on their on-premise cluster, using Slurm to manage multiple jobs efficiently.

- Production Workflow – Dedicated to optimizing the best research models for real-time inference on their farming machines. This involves converting models to efficient formats (ONNX, TensorRT) and deploying them on the NVIDIA Jetson AGX Xavier inside their AutoTrac system.

Let’s take a deeper look at both workflows.

Research Workflow

Blue River’s Research Lab operates an on-premise computing cluster (essentially their own supercomputer) to train models. They use Slurm for scheduling and managing complex training jobs. Think of it as Kubernetes for High-Performance Computing (HPC).
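To make the Slurm part more concrete, here is a minimal sketch of how a training job could be described for `sbatch`. The job name, GPU count, time limit, and training command are all hypothetical values I made up for illustration; the source does not show Blue River's actual job scripts.

```python
def render_sbatch(job_name: str, gpus: int, hours: int, command: str) -> str:
    """Render a Slurm batch script that requests GPUs and runs one command."""
    lines = [
        "#!/bin/bash",
        f"#SBATCH --job-name={job_name}",
        f"#SBATCH --gres=gpu:{gpus}",            # number of GPUs per node
        f"#SBATCH --time={hours:02d}:00:00",     # wall-clock limit (HH:MM:SS)
        f"#SBATCH --output={job_name}-%j.log",   # %j expands to the Slurm job ID
        "",
        command,
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical training command; save the output and submit it with `sbatch`.
    print(render_sbatch("yolo-small", gpus=2, hours=4, command="python train.py"))
```

Each experiment gets its own script, and Slurm queues them until the requested GPUs are free, which is how many jobs can share one cluster.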

Here’s a breakdown of their machine learning stack:

- PyTorch: They develop their custom models using PyTorch, which is a great choice. YOLOv5 was also built with PyTorch (spoiler alert: I’ll be using that architecture for the demo).

- Weights & Biases (W&B): For experiment tracking, monitoring, and collaboration.

- ONNX & TensorRT: For model optimization and deployment on edge devices (the NVIDIA Jetson AGX Xavier).

I got so excited reading about their system that I rebuilt their ML stack in a project! Check out my repo here.

How did I recreate this without a supercomputer?

Since I don’t have access to an HPC cluster and don’t want to pay for cloud GPUs, I came up with this approach:

- Train different YOLO models on Google Colab using the free GPUs.

- Monitor training with W&B.

- Save the trained PyTorch model (.pt) in W&B Artifacts.

- Download the model (.pt) locally and convert it to ONNX on my PC.

- Package the ONNX model into an inference API (Flask) inside a Docker container.

- Deploy the Docker container on a local Kubernetes cluster.
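The conversion step in the list above can be sketched with `torch.onnx.export`. This is a generic PyTorch sketch under my own assumptions (a 640x640 RGB input, the tensor names `images` and `output`, opset 12), not necessarily what my repo or Blue River does. For YOLOv5 checkpoints specifically, the YOLOv5 repository ships its own `export.py` script, which is probably the easier route.

```python
from pathlib import Path


def onnx_path_for(weights_path: str) -> Path:
    """Map a PyTorch checkpoint path (.pt) to the ONNX file written next to it."""
    return Path(weights_path).with_suffix(".onnx")


def export_to_onnx(model, weights_path: str, image_size: int = 640) -> Path:
    """Trace `model` with one dummy image batch and write it out as ONNX."""
    import torch  # imported lazily so the path helper works without PyTorch

    model.eval()
    # One RGB image in NCHW layout; the size is an assumption for this sketch.
    dummy = torch.zeros(1, 3, image_size, image_size)
    target = onnx_path_for(weights_path)
    torch.onnx.export(
        model,
        dummy,
        str(target),
        input_names=["images"],    # assumed tensor names, pick your own
        output_names=["output"],
        opset_version=12,
    )
    return target
```

The export only needs a CPU, which is why this step can run on a regular PC after the GPU training is done.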

Data Analysis & Model Training Monitoring

In my repo here, I trained various models using the Crop and Weed Detection dataset from Roboflow.

However, training and deploying models is only half the battle; you also need to monitor their performance. Blue River Technology uses W&B for this, and I found it incredibly useful.

Let’s be honest: developing a custom web app from scratch just to monitor training runs would be painful, time-consuming, and expensive. W&B solves this problem by providing:

- Real-time tracking of metrics like loss, accuracy, and mAP.

- Experiment logging for easy model comparison.

- Pipeline visualization (DAGs) to track the entire ML workflow.

I used W&B in my project, and it helped me understand why so many machine learning teams and data science teams rely on it. I chose to use YOLOv5 instead of building a PyTorch model from scratch for a faster setup and to get straight to the point. Additionally, its native integration with W&B saved me time.
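YOLOv5 handles the logging for you through its integration, but it helps to see what manual logging looks like. Below is a small sketch, assuming a hypothetical project name and metric names; `wandb.init` and `run.log` are the real W&B calls, everything else is illustrative.

```python
def flatten_metrics(epoch: int, metrics: dict, prefix: str = "val") -> dict:
    """Prefix metric names so W&B groups them into one dashboard section."""
    payload = {f"{prefix}/{name}": float(value) for name, value in metrics.items()}
    payload["epoch"] = epoch
    return payload


def log_epoch(run, epoch: int, metrics: dict) -> None:
    """Send one epoch's metrics to a W&B run (any object with a .log method)."""
    run.log(flatten_metrics(epoch, metrics))


def start_run(project: str = "crop-weed-detection"):
    """Open a W&B run; the project name is a made-up placeholder."""
    import wandb  # lazy import: only needed when actually logging to W&B

    return wandb.init(project=project)
```

In a training loop you would call `run = start_run()` once, then `log_epoch(run, epoch, {"mAP_0.5": ..., "box_loss": ...})` after every validation pass.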

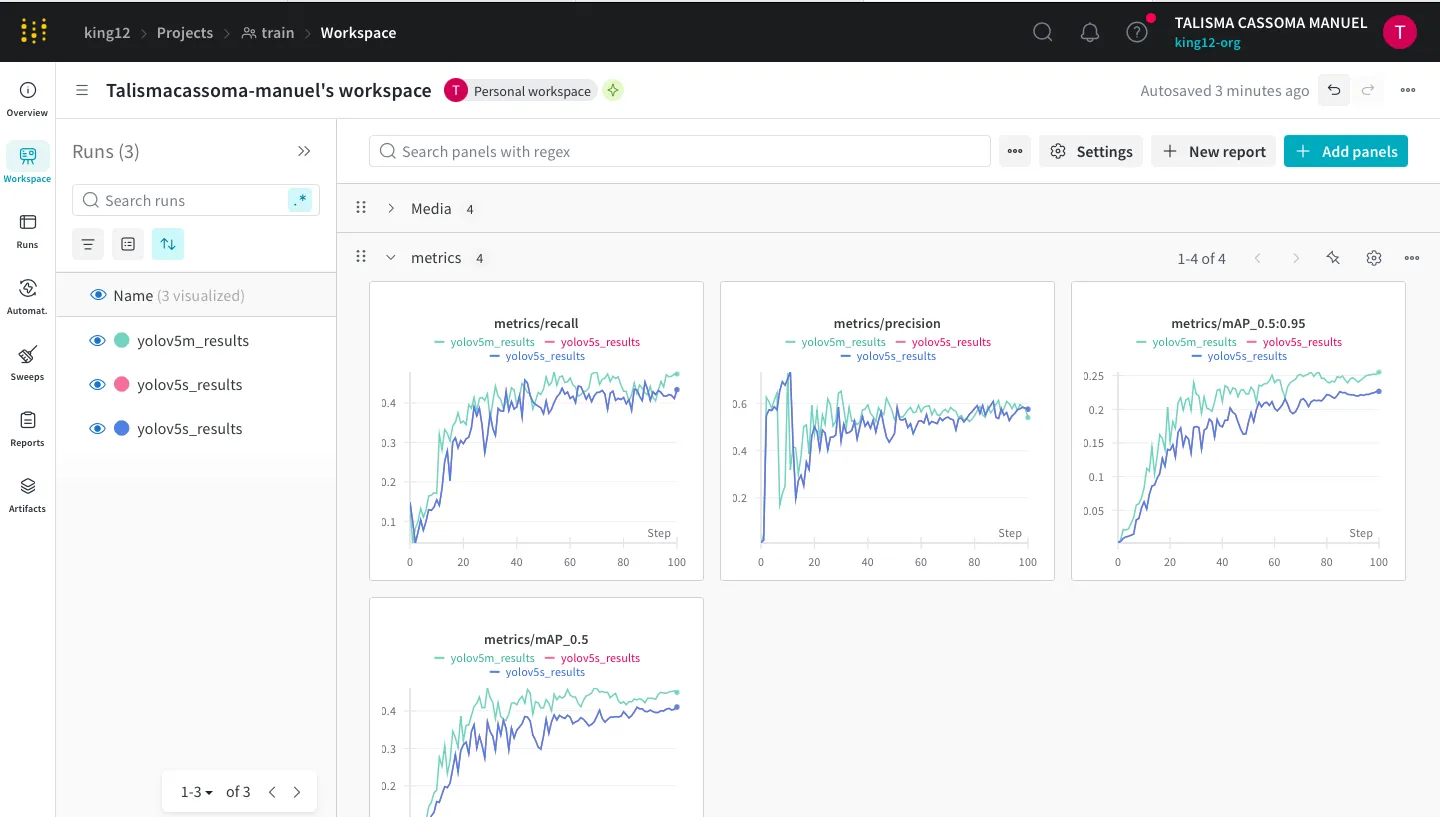

Take a look at my W&B monitoring dashboard below.

monitoring dashboard


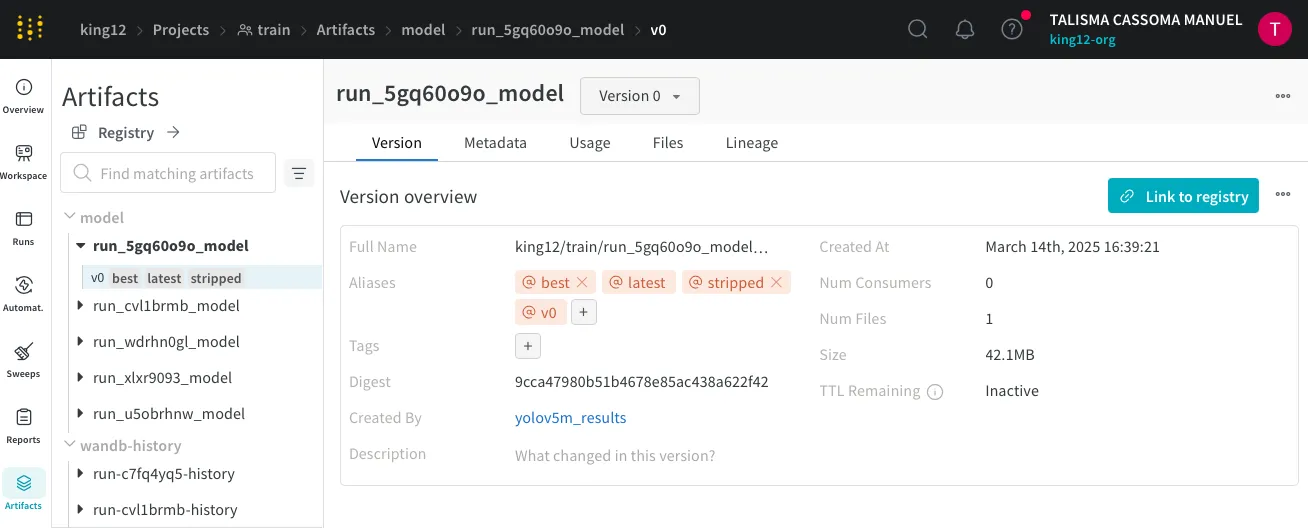

At Blue River, they also use W&B Artifacts to track the datasets used in training, trained model configurations, and evaluation results. This makes experiments much easier to reproduce and ensures that teams always know how a model was trained and deployed, with everything shared across teams in one tool. I stored my trained models the same way, as you can see here:

saved models in W&B


Check out the training Colab notebook here to see how I replicated this setup.
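Saving a model to W&B Artifacts and fetching it again elsewhere can be sketched roughly like this. The artifact name, project name, and job types are placeholders I chose for the example; the `wandb` calls themselves (`Artifact`, `add_file`, `log_artifact`, `use_artifact`, `download`) are the standard ones.

```python
def artifact_ref(name: str, alias: str = "latest") -> str:
    """Build the 'name:alias' reference W&B uses to pick an artifact version."""
    return f"{name}:{alias}"


def save_model(project: str, weights_path: str, name: str = "yolov5-crop-weed") -> None:
    """Upload a trained checkpoint as a versioned model artifact."""
    import wandb  # lazy import so artifact_ref works without W&B installed

    run = wandb.init(project=project, job_type="train")
    artifact = wandb.Artifact(name, type="model")
    artifact.add_file(weights_path)
    run.log_artifact(artifact)
    run.finish()


def fetch_model(project: str, name: str = "yolov5-crop-weed", alias: str = "latest") -> str:
    """Download an artifact version and return the local directory it landed in."""
    import wandb

    run = wandb.init(project=project, job_type="convert")
    artifact = run.use_artifact(artifact_ref(name, alias))
    local_dir = artifact.download()
    run.finish()
    return local_dir
```

Because `use_artifact` is recorded on the run, W&B can show which run produced a model and which run consumed it, which is where the reproducibility benefit comes from.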

Inference Pipeline: Deploying Models on AutoTrac

So, how do these models actually get deployed on AutoTrac (their AI-driven farming robot)?

Here’s the Blue River deployment pipeline:

The trained PyTorch JIT (just-in-time compilation) models, presumably the best-performing ones, are converted to ONNX format. From there, TensorRT converts them into TensorRT engine files. These models are then saved to Artifactory by a Jenkins CI/CD pipeline. For containers they use Docker and Kubernetes clusters. They also run Argo workflows on top of a Kubernetes (K8s) cluster hosted in AWS. For example, the PyTorch training services are deployed to the cloud using Docker.
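The source does not say how the TensorRT engine is actually built. One common way, which I am assuming here purely for illustration, is the `trtexec` command-line tool that ships with TensorRT. This sketch only assembles the command; the file names are hypothetical.

```python
import subprocess


def trtexec_command(onnx_path: str, engine_path: str, fp16: bool = True) -> list:
    """Assemble a trtexec call that builds a TensorRT engine from an ONNX file."""
    cmd = ["trtexec", f"--onnx={onnx_path}", f"--saveEngine={engine_path}"]
    if fp16:
        cmd.append("--fp16")  # allow half-precision kernels for faster inference
    return cmd


def build_engine(onnx_path: str, engine_path: str) -> None:
    """Run the build; requires TensorRT (and trtexec on PATH) on this machine."""
    subprocess.run(trtexec_command(onnx_path, engine_path), check=True)
```

Engine files are tied to the GPU and TensorRT version they were built with, so this step usually runs on the target device (or identical hardware) rather than on the training machine.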

In my case, I recreated this pipeline locally:

- All YOLOv5 models trained on Colab are stored in W&B Artifacts.

- I built a microservice that automatically converts these models from W&B Artifacts into ONNX format.

- I also built another microservice: a Flask API that serves the models and handles inference requests. It runs inside a Docker container managed by Kubernetes.
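An inference microservice like the one described above could look roughly like this. It is a simplified sketch, not the code from my repo: the `/predict` route, the JSON payload shape, and the confidence threshold are assumptions, and it skips image decoding and non-maximum suppression entirely.

```python
def filter_detections(rows, min_conf: float = 0.25):
    """Keep rows [x, y, w, h, conf, ...] whose confidence clears the threshold."""
    return [[float(v) for v in row] for row in rows if float(row[4]) >= min_conf]


def create_app(model_path: str = "model.onnx"):
    """Build a Flask app that serves one ONNX model through ONNX Runtime."""
    # Lazy imports keep filter_detections usable without these packages.
    import numpy as np
    import onnxruntime as ort
    from flask import Flask, jsonify, request

    app = Flask(__name__)
    session = ort.InferenceSession(model_path, providers=["CPUExecutionProvider"])
    input_name = session.get_inputs()[0].name

    @app.route("/predict", methods=["POST"])
    def predict():
        # Assumed payload: {"image": nested list shaped [3][H][W], values in 0..1}
        image = np.asarray(request.get_json()["image"], dtype=np.float32)
        outputs = session.run(None, {input_name: image[None, ...]})  # add batch dim
        rows = outputs[0][0].tolist()  # first output, first image in the batch
        return jsonify(detections=filter_detections(rows))

    return app


if __name__ == "__main__":
    create_app().run(host="0.0.0.0", port=5000)
```

Loading the session once at startup, rather than per request, keeps latency down, which matters when the whole point is real-time detection.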

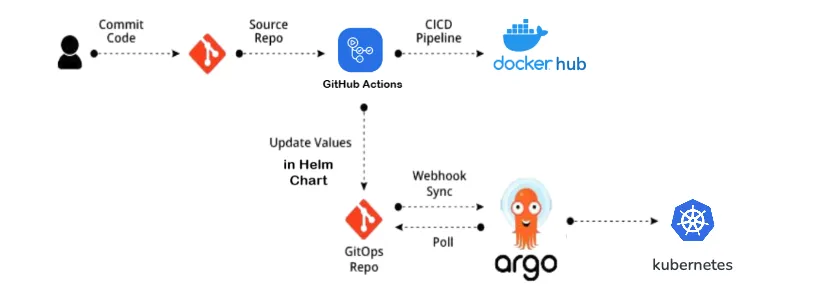

my deploy workflow


I set up a Continuous Integration (CI) pipeline using GitHub Actions. It’s a generic setup: on each commit to the main branch, the pipeline automatically builds the Flask API into a Docker image and pushes it to Docker Hub, where other environments can pull it. This automation keeps the published API image up to date with no manual intervention.

Finally, to deploy the API at scale, I turned to Kubernetes. I configured my local Kubernetes cluster to pull the latest Docker image from Docker Hub and deploy it as a scalable service. Kubernetes handles scaling, load balancing, and managing the service, keeping the inference API available and ready to serve predictions. The code lives in the repository.
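For readers who have not written a Kubernetes Deployment before, here is a sketch that generates one as JSON (which `kubectl apply -f` accepts alongside YAML). The deployment name, image name, replica count, and port are placeholders, not the values from my repo.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int = 2, port: int = 5000) -> dict:
    """Build a minimal apps/v1 Deployment for a containerized inference API."""
    labels = {"app": name}
    container = {
        "name": name,
        "image": image,
        "imagePullPolicy": "Always",  # re-pull so a new ':latest' push is picked up
        "ports": [{"containerPort": port}],
    }
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [container]},
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical image name; write the output to a file and `kubectl apply -f` it.
    manifest = deployment_manifest("inference-api", "example/inference-api:latest")
    print(json.dumps(manifest, indent=2))
```

Scaling then comes down to changing `replicas`; exposing the pods behind one address additionally needs a Service, which is left out of this sketch.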

Final Thoughts:

Blue River Technology’s ML stack is an excellent example of how to efficiently train, deploy, and monitor AI models for real-world applications. Their setup ensures:

- Scalability

- Optimization

- Reproducibility

- Automation

By following a similar approach, I was able to rebuild this stack using free and local resources, which is a great way to experiment with real-world ML deployment.

What do you think? Which part of this stack interests you the most?

Sources

Blue River posts:

- https://medium.com/pytorch/ai-for-ag-production-machine-learning-for-agriculture-e8cfdb9849a1

- https://developer.nvidia.com/blog/how-ai-and-robotics-are-driving-agricultural-productivity-and-sustainability/

Dataset: